< Using softmax / sparse_categorical_crossentropy >

# Reshaping of the dataset

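# To train on image files, each 28 x 28 matrix has to be converted into a single row. The number of images then becomes the number of rows, which gives the data-frame-like shape we normally train on.

# Each image must become one row with 784 columns. A library layer already exists for this, so we just use it.

# Taking X_train as an example: it holds 60,000 images, so the converted data has 60,000 rows and 784 columns. => Flatten() does this conversion inside the model, so the data passed to fit stays 3-D, with shape (60000, 28, 28).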

28*28

784
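As a minimal sketch of what the flattening step does to the shapes, the snippet below uses plain NumPy `reshape` on a small stand-in array. The array `images` and its size of 5 are made up for illustration; the real X_train has 60,000 images. Inside the model, `Flatten()` applies the equivalent conversion to each batch.

```python
import numpy as np

# Hypothetical stand-in for X_train: 5 grayscale images of 28 x 28 pixels.
images = np.arange(5 * 28 * 28, dtype=np.float32).reshape(5, 28, 28)

# Keep the image axis and merge the two pixel axes into one row of 784 columns.
flat = images.reshape(len(images), -1)

print(images.shape)  # (5, 28, 28)
print(flat.shape)    # (5, 784)

# Row-major flattening: pixel (row r, column c) lands in column r * 28 + c.
assert flat[2, 3 * 28 + 4] == images[2, 3, 4]
```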

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Flatten

# When classifying into 3 or more classes with integer labels, use softmax in the output layer and sparse_categorical_crossentropy as the loss in compile.

def build_model():
    model = Sequential()
    # Flatten each 28 x 28 image into a row of 784 values
    model.add(Flatten())
    # Hidden layer
    model.add(Dense(128, 'relu'))
    # Output layer: one unit per class
    model.add(Dense(10, 'softmax'))
    model.compile('adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])
    return model

# Adding the second layer (output layer)

- units == number of classes (10 in the case of Fashion MNIST)

- activation = 'softmax'

# Compiling the model

- Optimizer: Adam

- Loss: Sparse categorical crossentropy
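To make the softmax output and the sparse categorical crossentropy loss more concrete, here is a simplified NumPy sketch of both. It is not Keras's implementation (Keras also clips probabilities, for example), and the scores and labels are made up for illustration.

```python
import numpy as np

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1 along the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def sparse_categorical_crossentropy(y_true, probs):
    """Mean of -log(probability given to the true class); y_true holds integer labels."""
    picked = probs[np.arange(len(y_true)), y_true]
    return float(-np.log(picked).mean())

# Made-up scores for 2 samples and 3 classes (illustration only).
logits = np.array([[2.0, 1.0, 0.1],
                   [0.5, 2.5, 0.3]])
labels = np.array([0, 1])  # integer class ids, not one-hot vectors

probs = softmax(logits)
loss = sparse_categorical_crossentropy(labels, probs)
print(probs.sum(axis=1))
print(loss)
```

The "sparse" part only refers to the label format: the labels are plain integers such as 4, so no one-hot encoding of y_train is needed.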

model = build_model()

# Training the model

# Train with early stopping. Set patience to 10.

import tensorflow as tf

early_stop = tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=10)

epoch_history = model.fit(X_train, y_train, epochs= 1000, validation_split= 0.2, callbacks= [early_stop])

=> Training stopped at epoch 19.
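The idea behind patience can be sketched in a few lines of plain Python: training stops once the monitored value has failed to improve for `patience` epochs in a row. This is a simplified illustration, not the Keras callback itself, and the function name and loss values are made up.

```python
def early_stopping_epoch(val_losses, patience):
    """Return the 0-based epoch at which training would stop, or None if it never stops."""
    best = float("inf")
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None

# Made-up validation losses (illustration only).
history = [1.00, 0.80, 0.90, 0.85, 0.95, 0.70]
print(early_stopping_epoch(history, patience=2))  # 3
```

A larger patience tolerates longer plateaus, which is why epochs can be set as high as 1000 without actually running that long.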

# Plot the charts as well.

# 1. loss and val_loss

# 2. accuracy and val_accuracy

import matplotlib.pyplot as plt

plt.plot(epoch_history.history['loss'])

plt.plot(epoch_history.history['val_loss'])

plt.legend(['loss','val_loss'])

plt.show()

plt.plot(epoch_history.history['accuracy'])

plt.plot(epoch_history.history['val_accuracy'])

plt.legend(['accuracy','val_accuracy'])

plt.show()

# Model evaluation and prediction

# Final test (evaluation on the test set)

model.evaluate(X_test, y_test)

313/313 [==============================] - 1s 3ms/step - loss: 0.3909 - accuracy: 0.8761

[0.3909175395965576, 0.8761000037193298]

# What if we evaluate the model as though genuinely new data had come in?

# Let's take the 26th image (index 25) and predict it.

X_test[ 25, : , : ]

array([[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.31372549, 0.0745098 , 0. , 0. , 0. ,

0. , 0. , 0. , 0.03137255, 0. ,

0. , 0. , 0. , 0.00392157, 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.00392157, 0.16862745, 0.17647059,

0.45490196, 1. , 0.70196078, 0.49411765, 0.49411765,

0.50196078, 0.70196078, 0.96862745, 0.49411765, 0.21960784,

0.12156863, 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0.05882353, 0.26666667, 0.22745098, 0.21960784,

0.01960784, 0.36862745, 0.82745098, 0.88627451, 0.72156863,

0.84705882, 0.83921569, 0.45490196, 0.01960784, 0.21960784,

0.28627451, 0.18431373, 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0.23921569, 0.16862745, 0.1372549 , 0.16862745,

0.21176471, 0.06666667, 0.00392157, 0.17647059, 0.46666667,

0.21960784, 0.03137255, 0.03921569, 0.19215686, 0.15686275,

0.14117647, 0.26666667, 0.04705882, 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.00392157, 0. ,

0.05882353, 0.30196078, 0.17647059, 0.16862745, 0.17647059,

0.19215686, 0.22745098, 0.21176471, 0.11372549, 0.16862745,

0.20392157, 0.21960784, 0.23921569, 0.19215686, 0.17647059,

0.15686275, 0.21176471, 0.12156863, 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0.15686275, 0.2745098 , 0.16862745, 0.19215686, 0.2 ,

0.19215686, 0.20392157, 0.21176471, 0.14117647, 0.1372549 ,

0.23921569, 0.2 , 0.19215686, 0.17647059, 0.16862745,

0.16470588, 0.19215686, 0.21176471, 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0.23921569, 0.28235294, 0.18431373, 0.19215686, 0.19215686,

0.18431373, 0.20392157, 0.15686275, 0.23921569, 0.35686275,

0.14117647, 0.21960784, 0.18431373, 0.18431373, 0.17647059,

0.17647059, 0.21176471, 0.23921569, 0.00392157, 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0.02745098,

0.28235294, 0.3372549 , 0.23137255, 0.21176471, 0.19215686,

0.16470588, 0.15686275, 0.17647059, 0.15686275, 0.22745098,

0.23137255, 0.14901961, 0.14901961, 0.16862745, 0.15686275,

0.17647059, 0.25882353, 0.22745098, 0.09411765, 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0.0745098 ,

0.34117647, 0.41960784, 0.25490196, 0.2 , 0.21176471,

0.2 , 0.16862745, 0.20392157, 0.11372549, 0.2 ,

0.22745098, 0.18431373, 0.19215686, 0.19215686, 0.16470588,

0.21176471, 0.26666667, 0.22745098, 0.12941176, 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0.12941176,

0.36470588, 0.45490196, 0.21176471, 0.23921569, 0.2 ,

0.23921569, 0.19215686, 0.18431373, 0.2 , 0.23137255,

0.16862745, 0.2 , 0.21176471, 0.17647059, 0.21960784,

0.35686275, 0.30196078, 0.23921569, 0.16470588, 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0.2 ,

0.32156863, 0.54117647, 0.28235294, 0.23921569, 0.21176471,

0.24705882, 0.19215686, 0.1372549 , 0.41960784, 0.48235294,

0.1372549 , 0.21176471, 0.24705882, 0.12941176, 0.25882353,

0.50980392, 0.3372549 , 0.23921569, 0.2 , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0.23921569,

0.25882353, 0.65882353, 0.25490196, 0.17647059, 0.22745098,

0.25882353, 0.18431373, 0.17647059, 0.24705882, 0.32156863,

0.15686275, 0.23921569, 0.25490196, 0.12156863, 0.2745098 ,

0.58431373, 0.4 , 0.21176471, 0.23921569, 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.02745098, 0.25882353,

0.14901961, 0.72156863, 0.34901961, 0.11372549, 0.25490196,

0.2745098 , 0.17647059, 0.19215686, 0.21176471, 0.50196078,

0.12941176, 0.23921569, 0.25882353, 0.15686275, 0.14901961,

0.61176471, 0.50196078, 0.12156863, 0.25490196, 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.05882353, 0.2745098 ,

0.09411765, 0.74901961, 0.54117647, 0.05490196, 0.2745098 ,

0.32156863, 0.16470588, 0.19215686, 0.34901961, 0.60392157,

0.09411765, 0.2745098 , 0.25882353, 0.15686275, 0.0745098 ,

0.63137255, 0.56862745, 0.08627451, 0.29411765, 0.00392157,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.0745098 , 0.28627451,

0.14901961, 0.70196078, 0.63137255, 0.01176471, 0.26666667,

0.37647059, 0.15686275, 0.2 , 0.26666667, 0.75686275,

0.09411765, 0.32156863, 0.2745098 , 0.17647059, 0.02745098,

0.65490196, 0.63137255, 0.05490196, 0.30980392, 0.03921569,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.12156863, 0.23137255,

0.28627451, 0.58431373, 0.81960784, 0.01960784, 0.25882353,

0.39215686, 0.14901961, 0.22745098, 0.2 , 0.65882353,

0.10196078, 0.36862745, 0.25490196, 0.18431373, 0.01960784,

0.58431373, 0.66666667, 0.03921569, 0.36470588, 0.04705882,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.14901961, 0.14901961,

0.46666667, 0.62745098, 0.49411765, 0.03137255, 0.21176471,

0.39215686, 0.19215686, 0.22745098, 0.16470588, 0.32941176,

0.1372549 , 0.41960784, 0.2 , 0.20392157, 0.05882353,

0.42745098, 0.71372549, 0.02745098, 0.42745098, 0.02745098,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.10196078, 0.15686275,

0.39215686, 0.72156863, 0.32156863, 0.09411765, 0.21176471,

0.52941176, 0.21176471, 0.14901961, 0.43137255, 0.32941176,

0.15686275, 0.49411765, 0.20392157, 0.2 , 0.09411765,

0.2 , 0.77647059, 0.18431373, 0.41960784, 0.05490196,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.10196078, 0.23921569,

0.16470588, 0.90196078, 0.44705882, 0.14901961, 0.20392157,

0.69411765, 0.28235294, 0.16470588, 0.25882353, 0.30980392,

0.20392157, 0.57647059, 0.32941176, 0.23921569, 0.25882353,

0.2 , 0.34117647, 0.39215686, 0.35686275, 0.08235294,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.09411765, 0.22745098,

0.05882353, 0.95686275, 0.37647059, 0.05882353, 0.17647059,

0.48627451, 0.28627451, 0.19215686, 0.2 , 0.2745098 ,

0.23921569, 0.49411765, 0.1372549 , 0.21176471, 0.15686275,

0.20392157, 0.28235294, 0.54117647, 0.30196078, 0.12941176,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.12941176, 0.18431373,

0.05490196, 0.79215686, 0.26666667, 0.10980392, 0.22745098,

0.59215686, 0.31372549, 0.2 , 0.36470588, 0.23137255,

0.31372549, 0.57647059, 0.1372549 , 0.26666667, 0.16862745,

0.12941176, 0.32941176, 0.6745098 , 0.2 , 0.16470588,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.16470588, 0.21176471,

0.05490196, 0.81960784, 0.21960784, 0.23921569, 0.23137255,

0.52156863, 0.42745098, 0.20392157, 0.48627451, 0.16470588,

0.37647059, 0.61176471, 0.12941176, 0.34117647, 0.2745098 ,

0.06666667, 0.45490196, 0.62745098, 0.12941176, 0.17647059,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.14117647, 0.16862745,

0.04705882, 0.64705882, 0.06666667, 0.22745098, 0.2745098 ,

0.45490196, 0.41176471, 0.28235294, 0.14901961, 0.31372549,

0.4 , 0.63921569, 0.08235294, 0.35686275, 0.29411765,

0.01176471, 0.52941176, 0.66666667, 0.12156863, 0.2 ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.25882353, 0.24705882,

0.24705882, 0.80392157, 0.43921569, 0.43137255, 0.58431373,

0.49411765, 0.31372549, 0.25490196, 0.40392157, 0.40392157,

0.43137255, 0.61176471, 0.30196078, 0.49411765, 0.41176471,

0.3372549 , 0.35686275, 0.61960784, 0.19215686, 0.21960784,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.2745098 , 0.28235294,

0.41960784, 0.02745098, 0.32156863, 0.28235294, 0.26666667,

0.22745098, 0.52941176, 0.6745098 , 0.48627451, 0.8 ,

0.52156863, 0.28235294, 0.2745098 , 0.3372549 , 0.32941176,

0.18431373, 0.05490196, 0.56862745, 0.21960784, 0.25490196,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.25490196, 0.1372549 ,

0.43921569, 0.01960784, 0. , 0. , 0. ,

0. , 0.10196078, 0.28235294, 0. , 0.09411765,

0.0745098 , 0. , 0. , 0. , 0. ,

0. , 0. , 0.65490196, 0.23137255, 0.2745098 ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0.08235294, 0.08627451,

0.2 , 0.03137255, 0. , 0. , 0. ,

0. , 0.05882353, 0.10196078, 0.01176471, 0. ,

0.11372549, 0. , 0. , 0. , 0.00392157,

0. , 0.00392157, 0.21176471, 0.10980392, 0.05882353,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.01176471, 0. , 0. , 0.12941176,

0.16470588, 0. , 0. , 0. , 0.00392157,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ]])

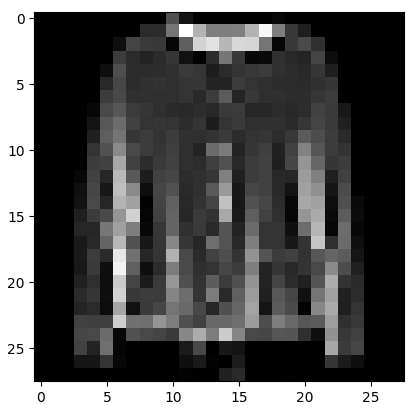

# plt.imshow is the command that displays an image

plt.imshow(X_test[25, : , : ] , cmap = 'gray')

plt.show()

# The correct label is 4, which is Coat. The answers are stored in y_test, so we can check there.

y_test[25]

4

# Let's see whether the model also predicts 4

model.predict(X_test[25, : , : ])

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-31-affac9f9c8a7> in <cell line: 2>()

1 # Let's see whether the model also predicts 4

----> 2 model.predict(X_test[25, : , : ])

1 frames

/usr/local/lib/python3.10/dist-packages/keras/src/engine/training.py in tf__predict_function(iterator)

13 try:

14 do_return = True

---> 15 retval_ = ag__.converted_call(ag__.ld(step_function), (ag__.ld(self), ag__.ld(iterator)), None, fscope)

16 except:

17 do_return = False

ValueError: in user code:

File "/usr/local/lib/python3.10/dist-packages/keras/src/engine/training.py", line 2440, in predict_function *

return step_function(self, iterator)

File "/usr/local/lib/python3.10/dist-packages/keras/src/engine/training.py", line 2425, in step_function **

outputs = model.distribute_strategy.run(run_step, args=(data,))

File "/usr/local/lib/python3.10/dist-packages/keras/src/engine/training.py", line 2413, in run_step **

outputs = model.predict_step(data)

File "/usr/local/lib/python3.10/dist-packages/keras/src/engine/training.py", line 2381, in predict_step

return self(x, training=False)

File "/usr/local/lib/python3.10/dist-packages/keras/src/utils/traceback_utils.py", line 70, in error_handler

raise e.with_traceback(filtered_tb) from None

File "/usr/local/lib/python3.10/dist-packages/keras/src/engine/input_spec.py", line 280, in assert_input_compatibility

raise ValueError(

ValueError: Exception encountered when calling layer 'sequential_1' (type Sequential).

Input 0 of layer "dense_2" is incompatible with the layer: expected axis -1 of input shape to have value 784, but received input with shape (None, 28)

Call arguments received by layer 'sequential_1' (type Sequential):

• inputs=tf.Tensor(shape=(None, 28), dtype=float32)

• training=False

• mask=None

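# An error is raised.

# The model was trained on 3-D data of shape [60000, 28, 28], so predict expects a batch of images. A single (28, 28) image is read as 28 samples of 28 values each, which is why the error reports shape (None, 28).

# So reshape the image to 3-D first, then predict.

# Check the shape of the X_test slice, then reshape it to 3-D.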

X_test[25, :, :].shape

(28, 28)
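The missing batch axis can be restored in several equivalent ways. The NumPy sketch below compares three of them on a made-up stand-in array `X` (30 blank images, not the real X_test); any of the three results has the (1, 28, 28) shape that predict needs for a single image.

```python
import numpy as np

# Hypothetical stand-in for X_test: 30 images of 28 x 28 pixels.
X = np.zeros((30, 28, 28), dtype=np.float32)

single = X[25, :, :]                # an integer index drops the first axis
a = single.reshape(1, 28, 28)       # explicit reshape
b = np.expand_dims(single, axis=0)  # insert a new leading axis
c = X[25:26]                        # a slice keeps the first axis

print(single.shape)               # (28, 28)
print(a.shape, b.shape, c.shape)  # (1, 28, 28) three times
```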

y_pred = model.predict(X_test[25, : , : ].reshape(1, 28, 28))

1/1 [==============================] - 0s 45ms/step

y_pred

array([[1.8778677e-03, 8.7685635e-07, 5.5940288e-01, 6.3805523e-06,

4.0627635e-01, 5.2395297e-09, 3.2431990e-02, 5.1623363e-08,

3.3407912e-06, 1.3304719e-07]], dtype=float32)

# The output splits a total probability of 1 across the 10 classes

y_pred.sum()

0.9999999

# There are 10 values; we just need the index of the largest one.

y_pred.max()

0.5594029

# argmax returns the index of the maximum value

y_pred.argmax()

2

# We can see that the model misclassified the image as 2 = Pullover.
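To see how close the call was, the sketch below ranks the probabilities printed above for image 25 and maps the class ids to the standard Fashion-MNIST label names. The `class_names` list and the `ranking` variable are introduced here for illustration.

```python
import numpy as np

# Fashion-MNIST label names, indexed by class id.
class_names = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
               "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]

# The probabilities printed above for image 25.
y_pred = np.array([[1.8778677e-03, 8.7685635e-07, 5.5940288e-01, 6.3805523e-06,
                    4.0627635e-01, 5.2395297e-09, 3.2431990e-02, 5.1623363e-08,
                    3.3407912e-06, 1.3304719e-07]], dtype=np.float32)

# Class ids sorted from most to least probable.
ranking = np.argsort(y_pred[0])[::-1]

for class_id in ranking[:2]:
    print(class_id, class_names[class_id], round(float(y_pred[0, class_id]), 4))
```

Pullover comes first at about 0.56, and the true class, Coat, is second at about 0.41, so the model was undecided between two visually similar garments.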

## We need to check the confusion matrix. ##

from sklearn.metrics import confusion_matrix, accuracy_score

y_pred = model.predict(X_test)

313/313 [==============================] - 1s 4ms/step

y_pred = y_pred.argmax(axis=1)

y_pred

array([9, 2, 1, ..., 8, 1, 5])

y_test

array([9, 2, 1, ..., 8, 1, 5], dtype=uint8)
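The `argmax(axis=1)` step matters because the prediction for a whole test set is a 2-D array with one row per image. A small sketch with made-up probabilities (3 samples, 4 classes) shows the difference between searching per row and searching the whole array:

```python
import numpy as np

# Made-up probabilities for 3 samples and 4 classes (illustration only).
probs = np.array([[0.1, 0.7, 0.1, 0.1],
                  [0.3, 0.2, 0.4, 0.1],
                  [0.6, 0.2, 0.1, 0.1]])
y_true = np.array([1, 3, 0])

# axis=1 searches within each row, giving one class id per sample.
y_hat = probs.argmax(axis=1)
print(y_hat)  # [1 2 0]

# Without axis, argmax flattens the array and returns a single index.
print(probs.argmax())  # 1

# Fraction of samples whose predicted id equals the label.
print((y_hat == y_true).mean())
```

For the single-image case earlier, the array had only one row, so `argmax()` without an axis happened to give the right class id.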

cm = confusion_matrix(y_test,y_pred)

cm

array([[904, 3, 13, 10, 4, 0, 52, 0, 14, 0],

[ 7, 970, 2, 13, 5, 0, 2, 0, 1, 0],

[ 58, 0, 760, 8, 89, 0, 79, 0, 6, 0],

[ 63, 3, 16, 846, 36, 1, 26, 0, 9, 0],

[ 8, 1, 86, 29, 756, 0, 109, 0, 11, 0],

[ 1, 0, 0, 1, 0, 954, 0, 24, 1, 19],

[191, 0, 53, 21, 45, 0, 674, 0, 16, 0],

[ 0, 0, 0, 0, 0, 10, 0, 969, 2, 19],

[ 5, 0, 5, 2, 0, 3, 5, 5, 975, 0],

[ 0, 0, 0, 0, 0, 7, 1, 39, 0, 953]])

import numpy as np

# Accuracy: correct predictions (the diagonal) divided by all predictions
np.diagonal(cm).sum() / cm.sum()

0.8761
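The confusion matrix holds more than the overall accuracy. As a sketch, the snippet below re-enters the matrix printed above and derives per-class recall and the single most frequent confusion. In scikit-learn's `confusion_matrix`, rows are true classes and columns are predicted classes; the variable names are introduced here for illustration.

```python
import numpy as np

# The confusion matrix printed above (rows: true class, columns: predicted class).
cm = np.array([[904,   3,  13,  10,   4,   0,  52,   0,  14,   0],
               [  7, 970,   2,  13,   5,   0,   2,   0,   1,   0],
               [ 58,   0, 760,   8,  89,   0,  79,   0,   6,   0],
               [ 63,   3,  16, 846,  36,   1,  26,   0,   9,   0],
               [  8,   1,  86,  29, 756,   0, 109,   0,  11,   0],
               [  1,   0,   0,   1,   0, 954,   0,  24,   1,  19],
               [191,   0,  53,  21,  45,   0, 674,   0,  16,   0],
               [  0,   0,   0,   0,   0,  10,   0, 969,   2,  19],
               [  5,   0,   5,   2,   0,   3,   5,   5, 975,   0],
               [  0,   0,   0,   0,   0,   7,   1,  39,   0, 953]])

accuracy = np.trace(cm) / cm.sum()

# Recall per class: correct predictions divided by the row total.
recall = np.diagonal(cm) / cm.sum(axis=1)
worst_class = int(recall.argmin())

# Largest off-diagonal cell: the most frequent single confusion.
off_diag = cm.copy()
np.fill_diagonal(off_diag, 0)
true_id, pred_id = np.unravel_index(off_diag.argmax(), off_diag.shape)

print(accuracy)
print(worst_class, recall[worst_class])
print(true_id, pred_id, off_diag[true_id, pred_id])
```

On this matrix the weakest row is class 6 (Shirt), with 674 of 1,000 images correct, and its most common error is being predicted as class 0 (T-shirt/top), 191 times.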

Stage 5 : Saving the model

# Saving the architecture

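# For machine learning we used the sklearn library, and we saved models with the joblib library. (You should be able to name these libraries when asked. Memorize this!!!)

# TensorFlow is one of the two most prominent deep learning libraries; the other, PyTorch, was developed by Facebook.

# TensorFlow has its own save command.

# 1. Saving the model as a folder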

model.save('my_model')

# Loading the model

my_model = tf.keras.models.load_model('my_model')

my_model

<keras.src.engine.sequential.Sequential at 0x7cfad2588580>

313/313 [==============================] - 1s 2ms/step

array([[4.46752324e-09, 1.61801045e-10, 1.37093503e-09, ...,

2.12868862e-02, 3.13661914e-08, 9.78708565e-01],

[2.90218828e-04, 1.16264203e-13, 9.99315679e-01, ...,

5.95844762e-10, 1.49318402e-09, 2.46942137e-12],

[1.97104811e-12, 9.99999940e-01, 1.87106104e-17, ...,

1.43382710e-31, 2.70476234e-15, 7.68003626e-29],

...,

[1.67075012e-08, 7.57680977e-16, 7.88335353e-09, ...,

3.36046126e-14, 9.99999702e-01, 4.33934119e-17],

[8.93007557e-10, 9.99995887e-01, 1.42211885e-11, ...,

1.25967238e-19, 7.32785166e-10, 5.95051022e-16],

[3.47720146e-07, 1.65205805e-08, 8.10090341e-07, ...,

2.51163822e-03, 9.56516760e-06, 4.19787739e-06]], dtype=float32)

/usr/local/lib/python3.10/dist-packages/keras/src/engine/training.py:3103: UserWarning: You are saving your model as an HDF5 file via `model.save()`. This file format is considered legacy. We recommend using instead the native Keras format, e.g. `model.save('my_model.keras')`. saving_api.save_model(

313/313 [==============================] - 1s 2ms/step

array([[4.46752324e-09, 1.61801045e-10, 1.37093503e-09, ...,

2.12868862e-02, 3.13661914e-08, 9.78708565e-01],

[2.90218828e-04, 1.16264203e-13, 9.99315679e-01, ...,

5.95844762e-10, 1.49318402e-09, 2.46942137e-12],

[1.97104811e-12, 9.99999940e-01, 1.87106104e-17, ...,

1.43382710e-31, 2.70476234e-15, 7.68003626e-29],

...,

[1.67075012e-08, 7.57680977e-16, 7.88335353e-09, ...,

3.36046126e-14, 9.99999702e-01, 4.33934119e-17],

[8.93007557e-10, 9.99995887e-01, 1.42211885e-11, ...,

1.25967238e-19, 7.32785166e-10, 5.95051022e-16],

[3.47720146e-07, 1.65205805e-08, 8.10090341e-07, ...,

2.51163822e-03, 9.56516760e-06, 4.19787739e-06]], dtype=float32)

# Saving network weights

[array([[ 0.02271761, -0.2944991 , -0.028769 , ..., -0.18715937,

0.19192332, 0.2322523 ],

[-0.16128081, -0.25424585, 0.04745227, ..., -0.15369563,

0.14395876, 0.5085935 ],

[-0.2782905 , -0.41959402, -0.40722004, ..., -0.04274533,

0.57243055, 0.54744416],

...,

[-0.26362264, 0.05695564, 0.08411638, ..., 0.36927092,

0.04152898, -0.2204325 ],

[-0.1484456 , 0.28268155, 0.03771231, ..., 0.34078935,

-0.28301492, 0.23884548],

[-0.11412988, 0.1903053 , 0.01186595, ..., -0.10899477,

0.6243985 , 0.35144082]], dtype=float32),

array([ 0.75177175, 0.15053117, 0.05497442, 0.24826758, 0.03845606,

-0.7300624 , 0.4869345 , 0.1645856 , 0.17036699, 0.42226842,

0.41258374, 0.09502481, 0.29047352, -0.29723576, 0.28523856,

0.3165303 , 0.67179143, -0.1871576 , -0.00964195, 0.19892164,

0.42491758, -0.09066594, 0.00101652, 0.00962504, 0.3880595 ,

0.6492963 , 0.19307303, 0.45541507, -0.02264952, 0.41349387,

0.4517958 , 1.0011144 , 0.59834266, 0.44533563, -0.07932588,

0.3370073 , 0.6208751 , -0.18764398, 0.00407016, 0.17869467,

-0.11198997, -0.0361215 , 0.6790055 , -0.00460031, 0.43578 ,

-0.46660697, 0.46700534, 0.2056715 , 0.66340035, 0.3952845 ,

0.5896454 , 0.06977251, -0.01740523, 0.01954206, 0.35846254,

0.1498976 , 0.67616636, 0.3265582 , 0.18628041, 0.1672784 ,

0.00305305, 0.12351997, 0.38389504, 0.12288803, -0.04645132,

0.46931618, -0.00837302, 0.4796714 , 0.5868239 , 0.17116618,

0.606803 , -0.19410375, 0.34444407, -0.53573734, 0.27141973,

0.03390748, -0.15060744, 0.14072667, 0.56889963, 0.40626645,

0.50931984, 0.16865733, 0.05080958, 0.48633102, 0.28172195,

0.6091963 , 0.2564431 , 0.37471884, 0.9118793 , 0.29310408,

-0.09859752, 0.02514499, -0.21432124, 0.20730704, 0.03468078,

0.8103517 , 0.47006375, 0.38234246, -0.31862065, -0.39394513,

0.24012381, -0.26132187, -0.00850173, 0.04642827, -0.26364726,

-0.02267468, 0.20497516, 0.33909145, 0.6142679 , -0.01869328,

0.27655303, 0.21719427, 0.16408785, 0.45002368, 0.08043167,

0.13827091, 0.6299317 , -0.55418956, 0.70523894, 0.49942005,

0.0654535 , 0.11457346, -0.00878259, -0.3879141 , 0.30698878,

-0.4051954 , 0.583928 , 0.15949349], dtype=float32),

array([[-0.7504998 , 0.55274314, -0.92340475, ..., 0.4010444 ,

-0.07803065, -0.11273979],

[-0.0514105 , -0.12080448, 0.14470707, ..., 0.12397166,

0.30801663, 0.11526827],

[ 0.1977766 , 0.4952558 , 0.21364571, ..., -1.2675058 ,

-0.3558461 , -0.86395127],

...,

[ 0.05692434, -0.5130418 , 0.06031365, ..., -0.44894597,

0.28305897, 0.39578396],

[ 0.17060135, -0.1262132 , -0.41282275, ..., -1.1019056 ,

-1.171807 , 0.27185997],

[-0.5375802 , -0.5694138 , 0.24222554, ..., -0.28896615,

0.097293 , -0.29430112]], dtype=float32),

array([-0.05129086, -0.35894313, 0.17791821, 0.30031106, -0.34605718,

0.07993025, 0.1875695 , 0.12199341, -0.10508378, -0.43523654],

dtype=float32)]
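The four arrays above have the layout one would expect from the two Dense layers: a (784, 128) kernel, a 128-value bias, a (128, 10) kernel and a 10-value bias. As a rough sketch of how such weights turn an image into class probabilities, the snippet below runs the same forward pass in NumPy. The weights here are random stand-ins with matching shapes, not the trained values (which are elided in the printout), so the probabilities are meaningless; only the shapes and the arithmetic are the point.

```python
import numpy as np

rng = np.random.default_rng(0)

# Random stand-ins with the shapes of the two Dense layers (not the trained values).
W1, b1 = rng.normal(scale=0.05, size=(784, 128)), np.zeros(128)
W2, b2 = rng.normal(scale=0.05, size=(128, 10)), np.zeros(10)

def forward(images):
    """Flatten -> Dense(128, relu) -> Dense(10, softmax) for a batch of 28 x 28 images."""
    x = images.reshape(len(images), -1)           # Flatten: (n, 28, 28) -> (n, 784)
    h = np.maximum(x @ W1 + b1, 0.0)              # ReLU
    z = h @ W2 + b2
    e = np.exp(z - z.max(axis=1, keepdims=True))  # numerically stable softmax
    return e / e.sum(axis=1, keepdims=True)

batch = rng.random((2, 28, 28))  # two made-up images with values in [0, 1)
probs = forward(batch)

n_params = W1.size + b1.size + W2.size + b2.size
print(probs.shape)  # (2, 10)
print(n_params)     # 101770
```

The parameter count, 784 * 128 + 128 + 128 * 10 + 10 = 101,770, is what saving the weights has to store for this architecture.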

Continued in the next post.
